Crawling millions of webpages demands more than technical prowess - it requires a strategic approach that keeps pace with a constantly changing web. This guide presents essential tips for crawling millions of webpages, providing a roadmap for navigating the internet efficiently. From defining clear objectives to handling dynamic content and embracing distributed crawling, these insights will guide you through large-scale web data extraction.

Make The Site Ready For Crawling

Before delving into web crawling, it is crucial to assess and optimize the website itself. Addressing potential issues that might impede a crawl's progress is a proactive step to ensure a smoother and more efficient process.

In the realm of large-scale web crawling, fixing issues before initiating the crawl might seem counterintuitive. However, when dealing with extensive websites, even minor problems can escalate when multiplied across millions of pages, resulting in significant obstacles.

Adam Humphreys, the founder of the Making 8 Inc. digital marketing agency, offers a savvy solution for identifying factors affecting Time to First Byte (TTFB), a metric gauging a web server's responsiveness. TTFB measures the time between a browser requesting a file and receiving the first byte of the response, which gives insight into server speed.

To assess TTFB accurately, Adam recommends using Google's PageSpeed Insights tool, which is built on Google's Lighthouse measurement technology. Core Web Vitals audits often flag slow TTFB. For a precise reading, compare the TTFB of a raw text file served from the same server with the TTFB of the actual website, which isolates the resources causing latency.

To identify problematic resources, Adam suggests adding placeholder content like Lorem Ipsum to a text file and measuring its TTFB. Often, excessive plugins and JavaScript in the source code contribute to latency. Running Lighthouse multiple times and averaging the scores helps account for variations caused by the ever-changing speed of data routing on the Internet.
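The idea of taking several readings and averaging them can also be sketched outside of Lighthouse. The following is a minimal illustration, not the method Adam describes: `measure_ttfb` and `summarize_ttfb` are hypothetical helper names, and the timing is a client-side approximation that also includes DNS lookup and connection setup.

```python
import statistics
import time
import urllib.request


def measure_ttfb(url: str, timeout: float = 10.0) -> float:
    """Approximate TTFB in seconds, as seen from the client."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read(1)  # wait until the first byte of the body is available
        return time.perf_counter() - start


def summarize_ttfb(samples: list[float]) -> dict[str, float]:
    """Combine repeated readings so one slow network hop does not skew the result."""
    return {
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "spread": max(samples) - min(samples),
    }
```

Calling `measure_ttfb` several times against a plain text file and against a full page on the same server, then passing each list of readings to `summarize_ttfb`, gives two averages to compare. A large gap between them suggests the page's own resources, rather than the server, are adding latency.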

Adam's meticulous approach involves assessing the website's responsiveness through averaged TTFB scores. If the server is unresponsive, the PageSpeed Insights tool provides insights into the underlying issues and suggests fixes, guiding users to optimize server performance before embarking on web crawling activities.

Politeness And Respect Robots.txt

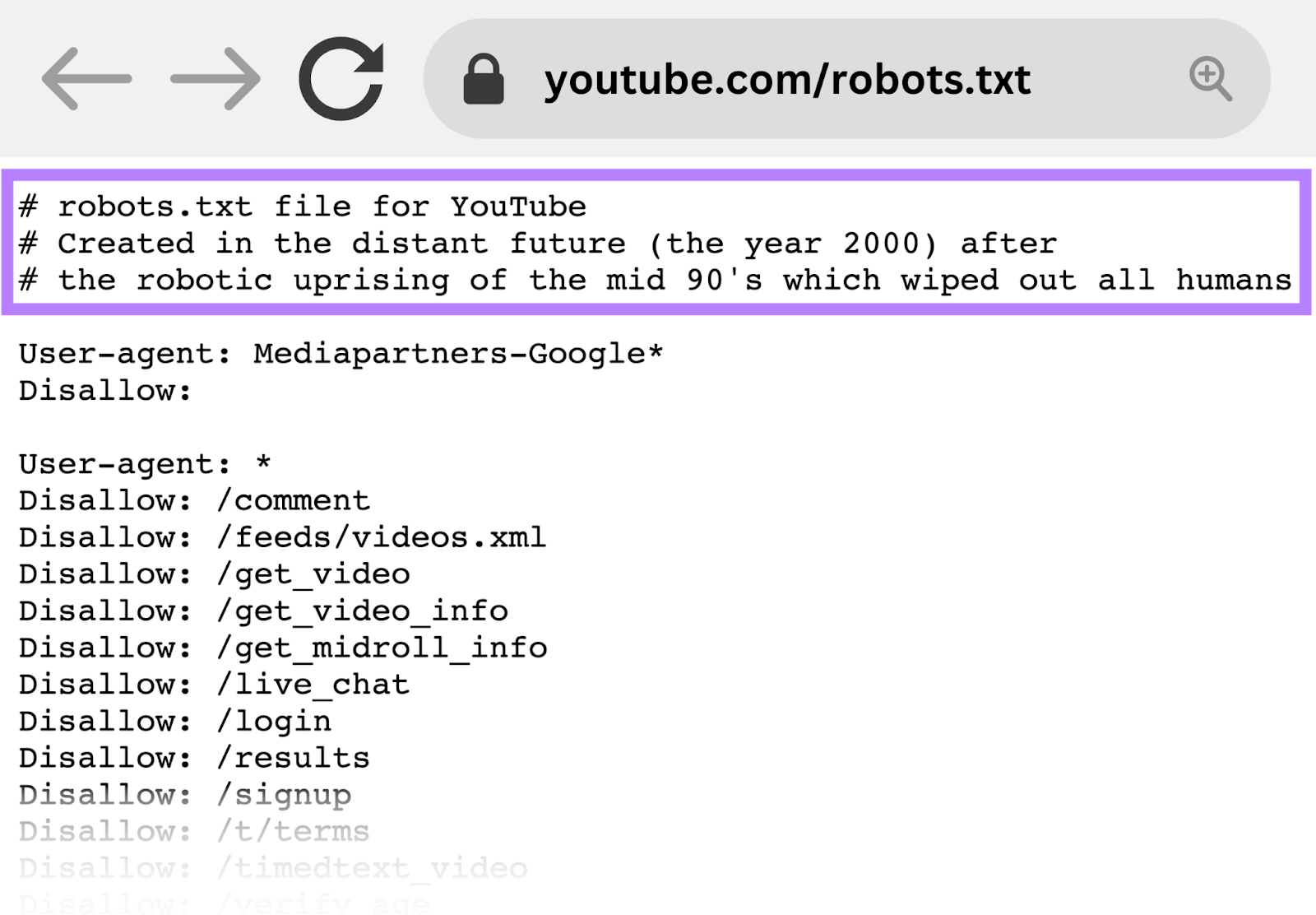

Maintaining a courteous and respectful approach to web crawling is imperative for building and sustaining positive relationships with website owners and administrators. One foundational aspect of this approach is the conscientious examination of the robots.txt file associated with a given website.

The robots.txt file serves as a virtual signpost, providing explicit guidelines on which sections of the website are accessible for crawling and, conversely, which areas are off-limits. This file is essentially a protocol that website administrators use to communicate with web crawlers, specifying the parameters within which their site can be explored. Disregarding these directives can not only strain relationships but may also result in legal repercussions.
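As a rough sketch of how a crawler might consult these rules, Python's standard library includes `urllib.robotparser`. The robots.txt content, the `example-crawler` user agent, and the `is_allowed` helper below are all made up for illustration; a real crawler would load the file from the target site instead of an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str, user_agent: str = "example-crawler") -> bool:
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)
```

With these rules, `is_allowed("https://example.com/private/data.html")` is rejected while a URL under `/blog/` is permitted, and `parser.crawl_delay("example-crawler")` exposes the requested delay between requests.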

By adhering to the rules outlined in the robots.txt file, web crawlers demonstrate a commitment to ethical and responsible practices. This is an industry best practice, and depending on the jurisdiction and the site's terms of service, ignoring it may also carry legal risk. Understanding and respecting the limitations outlined in the robots.txt file contributes to a more harmonious interaction between crawlers and website administrators.

It's worth noting that while robots.txt is a valuable tool for indicating which parts of a site to avoid, it doesn't provide foolproof protection against crawling. Some crawlers may choose to ignore these directives, so sensitive or private information should not rely solely on robots.txt for protection.

Maintaining a considerate and compliant stance regarding the guidelines specified in the robots.txt file is integral to responsible web crawling. This not only safeguards against potential legal ramifications but also fosters a collaborative atmosphere within the digital ecosystem. As the internet continues to evolve, upholding ethical standards becomes increasingly pivotal for the sustainable growth of web crawling practices.

Ensure Full Access To Server: Whitelist Crawler IP

Ensuring unimpeded access to a server is a critical facet of successful web crawling, and whitelisting the crawler's IP address is a strategic measure to overcome potential barriers. Firewalls and Content Delivery Networks (CDNs) are integral components of web security; however, they can inadvertently impede the progress of a web crawler by either blocking or slowing down access.

To guarantee seamless crawling, it is imperative to identify and address any security plugins, server-level intrusion prevention software, or CDNs that might pose obstacles. Understanding the intricacies of these security measures is essential for web crawlers aiming to navigate the digital landscape effectively.

In the context of WordPress, which powers a substantial portion of websites, several plugins facilitate the whitelisting process. Notable examples include Sucuri Web Application Firewall (WAF) and Wordfence, both of which are widely used to fortify the security posture of WordPress sites. These plugins offer functionality that allows administrators to add specific IP addresses to a whitelist, ensuring that the crawler's IP is granted unimpeded access.

Beyond WordPress, other web platforms may have similar security features, and it is incumbent upon web crawlers to identify and work with the relevant tools. By proactively addressing potential impediments to crawling at the server level, web crawlers can optimize their efficiency and minimize disruptions in the data extraction process.

It's important to note that while whitelisting the crawler's IP is a beneficial strategy, it should be approached with diligence. Implementing stringent security measures is crucial to protect websites from potential malicious activities, and administrators must strike a balance between facilitating crawling and maintaining robust security protocols.

Crawl During Off-Peak Hours

When orchestrating a website crawl, it is crucial to strike a balance between the need for data extraction and the impact on the server's performance. Ideally, web crawling should be unintrusive, allowing the server to seamlessly cater to both the aggressive crawling demands and the needs of actual site visitors.

In the best-case scenario, a robust server should adeptly manage aggressive crawling activities without compromising the user experience for site visitors. However, testing the server's response under load can provide valuable insights into its resilience and performance.

To assess the server's capability under stress, real-time analytics or access to server logs becomes indispensable. These tools allow immediate visibility into how the crawling process may be affecting site visitors. Keep a vigilant eye on the pace of crawling and instances of 503 server responses, as they can serve as indicators that the server is under strain.

If the server appears to be struggling to keep up with the crawling demands, it is prudent to take note of this response and strategically plan the crawl during off-peak hours. Choosing non-peak times for crawling minimizes the potential disruption to regular site visitors while ensuring that the crawling process remains efficient.
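One way to keep an eye on 503 responses is to tally status codes from the server's access log while the crawl runs. The sketch below assumes log lines in the common or combined log format, where the status code follows the quoted request line; the helper names are hypothetical.

```python
import re
from collections import Counter

# Matches the three-digit status code right after the quoted request line.
STATUS_RE = re.compile(r'" (\d{3}) ')


def status_counts(log_lines) -> Counter:
    """Count how often each HTTP status code appears in the given log lines."""
    counts = Counter()
    for line in log_lines:
        match = STATUS_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def share_of_503(log_lines) -> float:
    """Return the fraction of logged requests answered with 503."""
    counts = status_counts(log_lines)
    total = sum(counts.values())
    return counts["503"] / total if total else 0.0
```

If the share of 503 responses climbs while the crawl is active, that is a reasonable signal to slow down or reschedule the crawl for a quieter period.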

It's worth noting that Content Delivery Networks (CDNs) play a crucial role in mitigating the effects of an aggressive crawl. CDNs distribute content across multiple servers globally, reducing the load on a single server and enhancing the overall performance of the website.

Are There Server Errors?

When crawling an enterprise-level website, scrutinize any issues identified in the Crawl Stats report in Google Search Console. These issues act as early warnings, pinpointing potential challenges that could impede the crawling process. Addressing and resolving these concerns proactively ensures a smoother and more effective web crawling experience.

Beyond the Crawl Stats report, delving into server error logs unveils a gold mine of data that can illuminate a spectrum of errors with the potential to impact the crawling efficiency. These logs provide a detailed account of server interactions, allowing web crawlers to identify, diagnose, and rectify issues that may hinder the seamless extraction of data.

Of particular significance is the ability to debug otherwise invisible PHP errors, which can be critical in maintaining the functionality and responsiveness of a website. PHP errors, if left unaddressed, can significantly disrupt the crawling process, potentially leading to incomplete or inaccurate data extraction.

Optimize URL Frontier Management

In the dynamic landscape of web crawling, the optimization of URL frontier management emerges as a pivotal strategy, playing a significant role in enhancing speed and resource utilization. To embark on a successful large-scale web crawling expedition, a meticulous approach to managing URLs is paramount.

- Establishing Efficiency - Efficiently managing the URLs within the scope of crawling operations is fundamental. The sheer volume of URLs can be overwhelming, and without a systematic approach, the crawling process may become inefficient and resource-intensive.

- Implementing Priority Queues - One key strategy is the implementation of priority queues, a mechanism that assigns priority levels to URLs based on predefined criteria. This ensures that high-priority URLs, often representing critical or frequently updated content, are processed first. Prioritization is especially crucial when dealing with time-sensitive data or when maintaining up-to-date information is of utmost importance.

- Scheduling Mechanisms - Complementing priority queues is the utilization of scheduling mechanisms. These mechanisms facilitate a structured and systematic approach to crawling by allocating specific time slots or intervals for different categories of URLs. This not only optimizes resource utilization but also aids in maintaining a consistent and controlled crawling pace.

- Prompt Data Retrieval - The primary goal of optimizing URL frontier management is to enable the swift retrieval of critical data. By ensuring that high-priority URLs are addressed promptly, web crawlers can gather vital information without unnecessary delays. This agility is particularly advantageous when dealing with rapidly evolving content or time-critical updates.

- Reduction in Crawling Time - The cumulative effect of efficient URL frontier management is a significant reduction in overall crawling time. Prioritizing high-value URLs minimizes the time spent on less critical content, resulting in a more streamlined and expedited crawling process. This is of paramount importance when dealing with large-scale web crawling projects where time efficiency directly correlates with resource optimization.
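The priority-queue idea above can be sketched with Python's `heapq` module. This is a minimal single-process illustration; the `UrlFrontier` class and the convention that a lower number means higher priority are assumptions for this example, and a crawl of millions of pages would typically back the frontier with a persistent or distributed store.

```python
import heapq
import itertools


class UrlFrontier:
    """In-memory URL frontier: lowest priority number is crawled first."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        self._counter = itertools.count()  # tie-breaker preserves insertion order

    def add(self, url: str, priority: int) -> bool:
        """Queue a URL unless it was already queued; return True if added."""
        if url in self._seen:
            return False
        self._seen.add(url)
        heapq.heappush(self._heap, (priority, next(self._counter), url))
        return True

    def pop(self) -> str:
        """Remove and return the most urgent URL."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

A crawler loop would call `add` for every discovered link, with a priority derived from whatever criteria matter to the project (for example, how often the page changes), and call `pop` to decide what to fetch next.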

Periodically Verify Your Crawl Data

One common anomaly that may arise during crawling is when the server is unable to respond to a request, leading to the generation of a 503 Service Unavailable server response message. Identifying such occurrences is pivotal as they can hinder the generation of comprehensive and accurate crawl data.

- Addressing Crawl Anomalies - Upon encountering crawl anomalies, it is beneficial to temporarily pause the crawl and conduct a thorough investigation. By doing so, web crawlers can uncover underlying issues that require attention before proceeding with the crawl. This proactive approach ensures that the subsequent crawl yields more reliable and useful information.

- Prioritizing Data Quality Over Crawl Completion - While the primary objective may be to complete the crawl successfully, it is crucial to recognize that the crawl process itself is a valuable data point. Rather than viewing a paused crawl as a setback, consider it an opportunity for discovery. The insights gained from addressing and resolving issues contribute to the overall improvement of the crawl's data quality.

- Embracing the Discovery Process - In the intricate realm of web crawling, the journey holds as much significance as the destination. Pausing a crawl to investigate and fix issues is not a hindrance but a valuable step in the discovery process. It allows web crawlers to refine their approach, enhance the accuracy of data extraction, and fortify the overall reliability of the crawl.
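Deciding when to pause can be automated with a simple sliding window over recent responses. The sketch below is one possible approach, not a prescribed one; the `CrawlHealthMonitor` name, the window size, and the 20% threshold are arbitrary assumptions chosen for illustration.

```python
from collections import deque


class CrawlHealthMonitor:
    """Track recent status codes and flag when 503s become too frequent."""

    def __init__(self, window: int = 50, max_error_share: float = 0.2):
        self._recent = deque(maxlen=window)  # oldest entries fall off automatically
        self._max_error_share = max_error_share

    def record(self, status_code: int) -> None:
        self._recent.append(status_code)

    def should_pause(self) -> bool:
        """Return True once a full window contains too many 503 responses."""
        if len(self._recent) < self._recent.maxlen:
            return False  # not enough data yet to judge
        errors = sum(1 for code in self._recent if code == 503)
        return errors / len(self._recent) > self._max_error_share
```

The crawler would call `record` after every response and check `should_pause` before scheduling the next request, stopping for investigation when it returns `True`.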

Connect To A Fast And Reliable Internet

When engaging in web crawling activities, especially within an office setting, the choice of internet connection can significantly impact the speed and efficiency of the crawling process. Opting for the fastest available internet connection is not merely a convenience; it can be the differentiating factor between a crawl completing in a matter of hours versus extending over several days.

In most cases, the fastest internet connections are achieved through ethernet rather than Wi-Fi. While this might seem like obvious advice, its significance cannot be overstated. Many users default to Wi-Fi without contemplating the substantial speed advantage offered by a direct ethernet connection.

Utilizing an ethernet connection can be particularly advantageous in an office environment where the volume of data being crawled is substantial. This choice becomes even more critical when time-sensitive crawling tasks are at hand, such as extracting real-time data or monitoring dynamic content changes.

For those relying on Wi-Fi, there's a practical workaround to gain the speed benefits of ethernet. Move the laptop or desktop closer to the Wi-Fi router and plug it directly into one of the router's ethernet ports, which are often found on the rear of the router. This small adjustment can yield a substantial boost in internet speed, translating to a faster web crawling experience.

Handle Dynamic Content

In the contemporary landscape of web development, a significant number of websites heavily depend on dynamic content loaded via JavaScript. This poses a unique challenge for traditional web crawlers that may struggle to effectively parse and extract information from such dynamically generated pages. To adeptly handle this scenario, web crawlers can employ specialized techniques and tools tailored for navigating dynamic content.

Understanding The Challenge

Dynamic content, often orchestrated through JavaScript, can dynamically alter a webpage's structure and content even after the initial page load. This poses a challenge for traditional crawlers that typically rely on static HTML parsing. As a result, the intricate and ever-evolving nature of dynamic content necessitates a more sophisticated approach to ensure comprehensive data extraction.

Utilizing Headless Browsers

A strategic solution for handling dynamic content involves leveraging headless browsers. Unlike traditional browsers, headless browsers operate without a graphical user interface, making them well-suited for automated tasks such as web crawling. These browsers can interpret and execute JavaScript, enabling them to render and capture content dynamically loaded on a webpage.

Specialized Tools Like Selenium

Another powerful tool in the web crawler's arsenal for managing dynamic content is Selenium. Selenium is an open-source framework designed for browser automation. It allows web crawlers to interact with web pages, execute JavaScript, and dynamically retrieve information. Selenium's flexibility and versatility make it particularly valuable for navigating complex, JavaScript-heavy websites.

Considerations For Effective Implementation

When incorporating headless browsers or tools like Selenium, it's essential to consider the additional computational resources required for rendering dynamic content. This may impact the overall performance and speed of the crawling process. Striking a balance between efficiency and resource utilization becomes crucial, especially when dealing with large-scale web crawling projects.
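One way to limit the rendering cost is to use a headless browser only for pages that appear to need it. The sketch below pairs a rough heuristic (little visible text plus at least one script tag) with a Selenium fallback. It assumes Selenium 4 and a recent Chrome are installed; `looks_script_rendered`, `render_with_headless_chrome`, and the 200-character threshold are hypothetical choices for illustration, not part of Selenium.

```python
import re


def looks_script_rendered(html: str, min_text_chars: int = 200) -> bool:
    """Rough heuristic: the page has scripts but almost no visible text."""
    without_scripts = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    visible_text = re.sub(r"(?s)<[^>]+>", " ", without_scripts)
    visible_chars = len("".join(visible_text.split()))
    return "<script" in html.lower() and visible_chars < min_text_chars


def render_with_headless_chrome(url: str) -> str:
    """Load the page in headless Chrome and return the HTML after scripts ran."""
    # Imported lazily so the cheap heuristic works without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless=new")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        driver.quit()  # always release the browser process
```

Content that loads well after the initial page load may still be missing from `page_source`, so an explicit wait for a known element is often needed in practice.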

Crawl Frontier Seed Expansion

A foundational principle in effective web crawling involves the continuous expansion of the initial set of seed URLs. The seed URLs act as the starting points for the crawler, guiding its exploration across the web landscape. By dynamically increasing this set, web crawlers can systematically venture into uncharted territories, discovering and indexing new pages that may not have been part of the original crawling scope.

The dynamic expansion of seed URLs is instrumental in maintaining the freshness of the crawled data. As websites evolve and introduce new content, an updated seed list facilitates the crawler's ability to adapt and capture emerging information. This approach ensures that the crawled data remains current and reflective of the dynamic nature of online content.

To align with the ever-evolving nature of the web, it is imperative to regularly update the seed list. This involves a proactive review and addition of new URLs based on emerging trends, content changes, or shifts in online structures. By staying attuned to these developments, web crawlers can adeptly capture and integrate evolving content, enriching the comprehensiveness of the crawled dataset.

The strategic expansion of the crawl frontier necessitates a thoughtful and dynamic implementation. This involves the judicious selection of additional seed URLs based on the objectives of the crawling project. Whether driven by topical relevance, industry shifts, or content diversity, the expansion should align with the overarching goals of the web crawling initiative.

Benefits Of Seed Expansion

The benefits of crawl frontier seed expansion extend beyond the mere discovery of new pages. It enhances the overall coverage of the crawling operation, ensuring that the crawler remains synchronized with the rapid pace of content evolution on the web. This approach is particularly valuable in scenarios where staying ahead of emerging trends or swiftly capturing time-sensitive information is paramount.
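A common automated form of seed expansion is to harvest links from pages already crawled and add the unseen ones to the seed set. The following is a minimal sketch using only the standard library; `LinkCollector` and `expand_seeds` are hypothetical names, and how the new URLs are filtered by topical relevance is left to the project's goals.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every anchor tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def expand_seeds(seeds: set, page_url: str, html: str) -> set:
    """Return http(s) links on the page that are not yet in the seed set."""
    collector = LinkCollector()
    collector.feed(html)
    new_urls = set()
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments so duplicates collapse.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).scheme in ("http", "https") and absolute not in seeds:
            new_urls.add(absolute)
    return new_urls
```

The returned set can be reviewed or filtered against the project's objectives before being merged into the seed list.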

Server Memory

RAM is the powerhouse of a server's real-time operations, influencing its ability to efficiently process and serve web pages. An inadequacy in RAM can lead to a server's sluggish performance, impacting not only the user experience but also the crawling and indexing capabilities of search engines like Google.

- Identifying RAM-Related SEO Issues - When a server exhibits signs of slowness during a crawl or struggles to manage crawling tasks, it presents a potential SEO problem. The efficiency with which Google crawls and indexes web pages is directly tied to the server's ability to handle the demands placed upon it during the crawling process.

- Assessing RAM Requirements - For a Virtual Private Server (VPS), a minimum of 1GB of RAM is typically necessary. However, for websites with high traffic, especially those functioning as online stores, a recommended range of 2GB to 4GB of RAM ensures optimal performance. Generally, the principle holds that more RAM translates to better server responsiveness and enhanced capabilities in handling concurrent crawling requests.

- Potential Culprits of Slowdowns - In instances where a server possesses an ample amount of RAM, yet experiences slowdowns, the issue may lie elsewhere. Inefficient software or plugins can contribute to excessive memory requirements, impeding the server's performance. A thorough examination of the server's software ecosystem becomes essential in pinpointing and rectifying these bottlenecks.

- Strategic Considerations for Enhanced Performance - To fortify a server's performance in the realm of web crawling, a holistic approach involves not only ensuring an adequate amount of RAM but also scrutinizing the efficiency of the server's software components. Proactive monitoring, regular software updates, and judicious management of plugins contribute to a resilient and high-performing server infrastructure.

Crawl For Site Structure Overview

A targeted approach to site structure analysis involves configuring the crawler to abstain from crawling external links and internal images. By excluding these elements, the crawler streamlines its focus, directing efforts solely toward downloading URLs and capturing the intricate link structure within the site. This strategic exclusion accelerates the crawl process, enabling a rapid extraction of key structural insights.

- Optimizing Crawler Settings - Beyond limiting the crawler's scope to URLs and link structures, additional settings can be fine-tuned to enhance the efficiency of the overview-focused crawl. Unchecking specific crawler settings that are not integral to site structure analysis contributes to a faster crawl without compromising the quality of the extracted data.

- Focusing on URL and Link Structure - By prioritizing the download of URLs and the exploration of link structures, webmasters and SEO professionals gain a swift and insightful overview of a site's architecture. This focused approach facilitates a quicker assessment of key elements such as hierarchical organization, interlinking patterns, and the overall navigational framework of the website.

- Balancing Speed and Insight - While expediting the crawl process is advantageous for a site structure overview, it's crucial to strike a balance between speed and the depth of analysis. Careful consideration of which settings to adjust ensures that the crawler remains targeted in its efforts while still providing meaningful insights into the site's organization.

- Strategic Implementation - The implementation of a streamlined crawl for site structure analysis requires a strategic understanding of the website's objectives. Whether assessing navigational pathways, evaluating the effectiveness of internal linking, or simply gaining a quick overview of the site's hierarchy, the crawler's configuration should align with the specific goals of the analysis.
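In a custom crawler, the exclusions described above amount to a scope filter applied before a URL is queued. The sketch below is one simple way to express it; `in_structure_scope` and the list of image extensions are assumptions for illustration, and desktop crawling tools expose the same idea as checkboxes rather than code.

```python
from urllib.parse import urlparse

# File extensions treated as images; extend as needed for the site in question.
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg", ".ico")


def in_structure_scope(url: str, site_host: str) -> bool:
    """Keep only internal, non-image URLs for a structure-focused crawl."""
    parsed = urlparse(url)
    if parsed.hostname != site_host:
        return False  # external link: skip
    return not parsed.path.lower().endswith(IMAGE_EXTENSIONS)
```

Matching on the exact hostname means subdomains count as external here; whether that is appropriate depends on how the site is organized.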

Effective Request Throttling

- Responsive Website Dynamics - The frequency of requests should be dynamically adjusted based on the responsiveness of the website. A well-tuned crawler recognizes the need to adapt its pace according to the server's ability to handle incoming requests. This adaptive approach ensures that the crawling process remains efficient without imposing undue strain on the server.

- Robots.txt Compliance - Thoroughly understanding and adhering to the directives specified in the robots.txt file is fundamental. This file serves as a guidepost, delineating the dos and don'ts for web crawlers. Adjusting the request frequency in alignment with the specified limitations not only ensures compliance with ethical crawling practices but also fosters a harmonious relationship between crawlers and website administrators.

- Respectful Crawling Etiquette - Request throttling is not merely a technical adjustment; it embodies a commitment to respectful crawling etiquette. By avoiding aggressive and disruptive crawling behavior, web crawlers contribute to the overall health and performance of the websites they explore. This approach reflects positively on the crawler's reputation and minimizes the risk of encountering access restrictions.

- Adaptive Throttling Algorithms - Employing adaptive throttling algorithms enhances the precision of the crawling process. These algorithms can dynamically adjust the crawl rate based on real-time server responses, ensuring optimal efficiency while minimizing the likelihood of causing server strain. This level of sophistication is particularly beneficial when dealing with websites of varying sizes and complexities.
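As one illustration of adaptive throttling, the delay between requests can back off sharply when the server shows strain and recover gradually when it responds well. The `AdaptiveThrottle` class, the doubling and 10% recovery factors, and the 2-second slowness threshold below are assumptions chosen for this sketch, not a standard algorithm.

```python
class AdaptiveThrottle:
    """Adjust the delay between requests based on how the server responds."""

    def __init__(self, min_delay: float = 0.5, max_delay: float = 60.0,
                 slow_seconds: float = 2.0):
        self.min_delay = min_delay  # could be set from a robots.txt Crawl-delay
        self.max_delay = max_delay
        self.slow_seconds = slow_seconds
        self.delay = min_delay

    def update(self, status_code: int, response_seconds: float) -> float:
        """Record one response and return the delay to use before the next request."""
        if status_code in (429, 503) or response_seconds > self.slow_seconds:
            # Server is struggling: back off quickly.
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            # Server is healthy: speed up slowly.
            self.delay = max(self.delay * 0.9, self.min_delay)
        return self.delay
```

The crawler would sleep for the returned delay between requests to the same host. Using the site's Crawl-delay value, when one is published, as `min_delay` keeps the throttle within the limits the administrator asked for.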

Effective Duplicate Detection

Addressing the challenge of duplicate content is paramount in the realm of large-scale crawling. It's imperative to implement robust algorithms that not only detect but also efficiently eliminate duplicate pages. This not only saves valuable storage space and processing power but is particularly crucial when dealing with dynamic content sources like news articles, forums, and e-commerce websites.

Algorithmic Precision

Utilize sophisticated algorithms capable of precise duplicate detection. These algorithms should consider various factors, including content similarity, structure, and context, ensuring accurate identification and elimination of duplicates.
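Two widely used building blocks for this are a hash of the normalized text for exact duplicates and Jaccard similarity over word shingles for near duplicates. The sketch below shows both in their simplest form; the function names and the three-word shingle size are assumptions, and comparing every pair of pages this way does not scale to millions of documents without an indexing technique such as MinHash on top.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase the text and collapse punctuation and whitespace."""
    return " ".join(re.findall(r"\w+", text.lower()))


def fingerprint(text: str) -> str:
    """Hash of the normalized text; equal fingerprints mean exact duplicates."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word sequences of the given size."""
    words = normalize(text).split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Similarity from 0.0 (no shared shingles) to 1.0 (identical shingle sets)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)
```

A crawler might store each page's fingerprint in a set to drop exact copies cheaply, and reserve the similarity check for pages that share a URL pattern or template, treating scores above a chosen threshold as near duplicates.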

Resource Conservation

The elimination of duplicate pages translates to significant savings in both storage space and processing power. This resource conservation is vital for maintaining the efficiency and scalability of large-scale crawling operations.

Context-Specific Challenges

Tailor duplicate detection strategies to the unique challenges posed by different content types. News articles, forums, and e-commerce platforms often present distinct challenges, requiring customized approaches for the effective management of duplicate content.

Real-Time Adaptability

Implement systems that can adapt to real-time changes in content. Dynamic sources change constantly: news articles receive frequent updates, and forums host ongoing discussions. An adaptive approach ensures continuous efficiency in duplicate detection.

User Experience Impact

Recognize the impact of duplicate content on user experience, especially for e-commerce websites. Ensuring a streamlined and unique content presentation not only aids in SEO but also enhances the overall user experience and trust in the platform.

See What Google Sees

A crucial concept in this realm is the "crawl budget," representing the resources Google allocates to crawl and index a website. The higher the number of successfully indexed pages, the greater the opportunity for these pages to rank in search results.

For smaller websites, concerns about Google's crawl budget may be minimal. However, for enterprise-level websites, maximizing this crawl budget becomes a top priority. Unlike crawls where ignoring noindex tags is advisable, this crawl calls for a different approach: its primary goal is to understand how Google perceives the entire website.

Google Search Console undoubtedly provides a wealth of information, but conducting a self-crawl with a user agent disguised as Google offers an unparalleled opportunity. This approach allows for a comprehensive snapshot of the website, revealing insights that can enhance the indexing of relevant pages while pinpointing potential areas where Google's crawl budget might be inefficiently utilized.

Executing this type of crawl requires meticulous configuration. Set the crawler's user agent to Googlebot, and configure the crawler to follow robots.txt directives and to obey the noindex directive. This precision ensures that the crawl mirrors Google's experience, shedding light on how the site is presented to Googlebot and exposing any discrepancies that may hinder optimal crawling.
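For a custom crawler, obeying noindex means checking both the robots meta tag in the HTML and the `X-Robots-Tag` response header. The sketch below shows one way to do that with the standard library. The user-agent string is one of the variants Google documents for Googlebot; `RobotsMetaParser` and `is_noindex` are hypothetical names, and the header lookup is simplified to an exact-case dictionary key.

```python
from html.parser import HTMLParser

# One documented Googlebot user-agent string, sent as the User-Agent header.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for part in attributes.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindex(html: str, headers: dict) -> bool:
    """Return True if the page or its response headers carry a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = headers.get("X-Robots-Tag", "").lower()
    header_directives = {part.strip() for part in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives
```

Pages for which `is_noindex` returns `True` would be recorded but excluded from the set of indexable pages, which approximates how the site appears to Googlebot.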

Setting the stage for diagnostic prowess, this method enables the identification of overlooked pages that merit crawling attention. It becomes particularly valuable in uncovering pages that, although useful to users, may be deemed of lower quality by Google - such as those containing sign-up forms.

People Also Ask

Why Is Website Crawling Important For SEO?

Website crawling is essential for SEO as it allows search engines to discover, index, and rank web pages. It enables search engines to understand the content and structure of a site, influencing its visibility in search results.

How Often Does Google Crawl Websites?

The frequency of Google's crawls varies based on factors like site popularity and content updates. High-quality and frequently updated sites may be crawled more often, but there's no fixed schedule for all websites.

What Is The Impact Of Page Load Speed On Crawling?

Page load speed can affect crawling. Slow-loading pages may lead to incomplete crawls as search engines allocate finite resources. Optimizing page speed enhances the chances of comprehensive crawling.

Can Too Many Redirects Affect Crawling And Indexing?

Yes, excessive redirects can impact crawling and indexing. Each redirect introduces latency, potentially slowing down the crawling process. It's advisable to minimize unnecessary redirects for optimal search engine performance.

How Can I Check If My Website Is Being Crawled By Search Engines?

Utilize tools like Google Search Console or Bing Webmaster Tools to monitor crawl activity. These platforms provide insights into how search engines interact with your site, highlighting any crawl errors or issues.

Final Thoughts

As you navigate the ever-evolving terrain of web crawling, armed with these tips for crawling millions of webpages, you are poised for success in data extraction. By prioritizing URLs, embracing strategic planning, and respecting ethical considerations, your web crawling endeavors can be not just efficient but also sustainable. Unraveling the vast expanse of web data may be intricate, but with these tips, you can unlock a wealth of information effectively and responsibly.